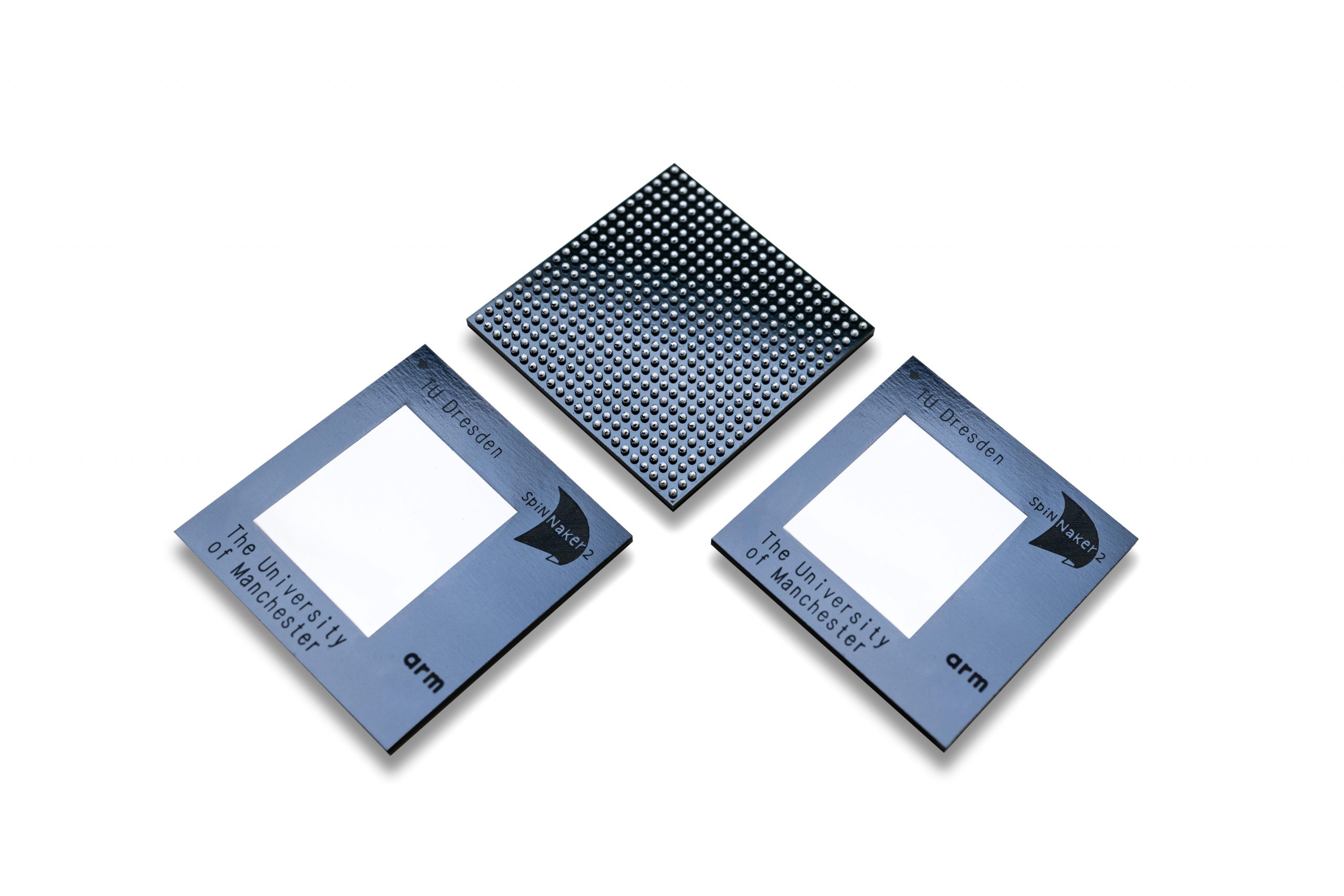

SpiNNaker2

SpiNNaker2 is a massively parallel chip that combines 152 ARM processors with powerful native accelerators to support the efficient implementation of sparsity-aware artificial neural networks, symbolic AI, and spiking neural networks. SpiNNaker2 is the basic hardware building block of our large-scale system, the SpiNNcloud.

Architecture

SpiNNaker2's biological inspiration is evident in the chip's architecture. Distributed processing elements (PEs) work asynchronously, enabling massively parallel, event-based, and sparse computation with on-demand power consumption. The foundation of this asynchronous operation is SpiNNaker2's patented lightweight communication system.
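The event-based principle can be illustrated with a small Python model: each processing element (PE) only does work when an event lands in its inbox, and PEs that receive nothing stay idle. The class `ProcessingElement` and the function `run` are hypothetical names for illustration, not part of any SpiNNaker2 software. The sequential loop only mimics event-driven behavior; it does not model truly asynchronous hardware or actual power figures.

```python
from collections import deque
from dataclasses import dataclass, field


# Hypothetical toy model for illustration only; not a SpiNNaker2 API.
@dataclass
class ProcessingElement:
    pe_id: int
    inbox: deque = field(default_factory=deque)
    handled: int = 0  # total number of events this PE has processed

    def handle_next(self) -> None:
        # Consume one queued event; real hardware would run a handler here.
        self.inbox.popleft()
        self.handled += 1


def run(pes, events):
    """Deliver (pe_id, payload) events, then let each PE drain its own inbox.

    Returns (active, idle): ids of PEs that have or have not handled any event.
    """
    by_id = {pe.pe_id: pe for pe in pes}
    for pe_id, payload in events:
        by_id[pe_id].inbox.append(payload)
    for pe in pes:
        # A PE with an empty inbox does no work at all.
        while pe.inbox:
            pe.handle_next()
    active = [pe.pe_id for pe in pes if pe.handled > 0]
    idle = [pe.pe_id for pe in pes if pe.handled == 0]
    return active, idle


if __name__ == "__main__":
    # 152 PEs, matching the 152 ARM processors named above.
    pes = [ProcessingElement(i) for i in range(152)]
    # Sparse input: only three PEs receive events in this window.
    events = [(3, "spike"), (3, "spike"), (40, "spike"), (151, "spike")]
    active, idle = run(pes, events)
    print(f"active PEs: {active}, idle PEs: {len(idle)}")
    # active PEs: [3, 40, 151], idle PEs: 149
```

In this sketch the amount of work scales with the number of events rather than with the number of PEs, which is the property that on-demand power consumption relies on.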

Features

- The chip is designed to draw drastically less power when idle than at peak performance. Each processing element runs autonomously, so power can be controlled per element with Dynamic Voltage and Frequency Scaling (DVFS), which provides fine-grained, on-demand power consumption.

- A lightweight Network-on-Chip distributes information across the chip and supports simple input and output streaming of data with minimal latency.

- The central SpiNNaker2 router selectively broadcasts (multicasts) information from a sending PE to multiple receiving PEs, drastically reducing network traffic.

- Four universally programmable processing elements form a Quad-PE with low-latency connections, so information allocated within a Quad-PE can be exchanged quickly and efficiently.

- Dedicated AI accelerators for each PE boost the chip’s performance on cutting-edge AI applications.

- Brain-like accelerators enhance computational performance for biologically inspired networks. Combined with the AI accelerators, they give our system native support for hybrids of traditional AI and spiking networks.

- A variety of standard interfaces, such as Ethernet, allow simple integration of our chip into existing systems. This flexibility lets AI applications with real-time requirements leverage the power of SpiNNaker2.

- Multiple high-bandwidth interfaces can address large external memories for memory-intensive computations.

- Applied sparsity exploits the low idle power consumption by drastically reducing the number of computations. Regardless of model size, applied sparsity also reduces network communication by up to 90%, leading to further power savings.

- The fast chip-to-chip links that interconnect SpiNNaker2 chips maintain real-time response when scaling up. This novel combination of network topology and high-speed peripherals enables the assembly of large-scale SpiNNclouds.
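The router's selective broadcasting and the traffic savings from sparsity can be sketched in Python. This is a hypothetical model under stated assumptions: routing entries use a key/mask match in the style of the original SpiNNaker router, and the table format, the function names `route` and `packets_injected`, and all numbers are illustrative, not the SpiNNaker2 router's actual design.

```python
from typing import List, Optional, Set, Tuple

# Hypothetical routing entry: (key, mask, destination PE ids). Loosely modelled
# on key/mask multicast routing in the original SpiNNaker; the SpiNNaker2
# router's real table format is not described in this text.
RoutingEntry = Tuple[int, int, Set[int]]


def route(table: List[RoutingEntry], routing_key: int) -> Optional[Set[int]]:
    """Return the destinations of the first entry whose masked key matches."""
    for key, mask, targets in table:
        if (routing_key & mask) == key:
            return targets
    return None  # no match; a real router would need a default behavior


def packets_injected(active_senders: int, fan_out: int, multicast: bool) -> int:
    """Packets the senders inject per time step (hypothetical traffic model).

    Unicast: each active sender sends one copy per receiver.
    Multicast: each active sender sends one packet; the router replicates it.
    """
    per_sender = 1 if multicast else fan_out
    return active_senders * per_sender


if __name__ == "__main__":
    table = [
        (0x0100, 0xFF00, {4, 5, 6, 7}),  # keys 0x01xx -> PEs 4 to 7
        (0x0200, 0xFF00, {12, 20}),      # keys 0x02xx -> PEs 12 and 20
    ]
    print(route(table, 0x0123))  # {4, 5, 6, 7}
    print(route(table, 0x0300))  # None

    senders, fan_out = 1000, 100
    print(packets_injected(senders, fan_out, multicast=False))  # 100000
    print(packets_injected(senders, fan_out, multicast=True))   # 1000
    # Sparse case: only 10% of senders are active in this time step.
    print(packets_injected(senders // 10, fan_out, multicast=True))  # 100
```

In this toy model, multicast makes injected traffic independent of fan-out, and 10% activity cuts it by 90% relative to the dense case. Real savings depend on the network, the mapping of the model onto the chips, and the actual activity level.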

The combination of all these features plays a crucial role in making our large-scale system fast as a whole.

“Anyone can build a fast CPU. The trick is to build a fast system.”

– Seymour Cray